Fibonacci Sculptures - Part II

These are 3-D printed sculptures designed to animate when spun under a strobe light. The placement of the appendages is determined by the same method nature uses in pinecones and sunflowers. The rotation speed is synchronized to the strobe so that one flash occurs every time the sculpture turns 137.5°, the golden angle. If you count the spirals on any of these sculptures, you will find that the counts are always Fibonacci numbers.

© John Edmark
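For anyone who wants to play with the idea, here is a minimal sketch of golden-angle placement. It assumes Vogel's flat sunflower model, where point k sits at k golden-angle turns and at a radius proportional to the square root of k. That radius rule and the names `phyllotaxis_points` and `scale` are illustrative assumptions, not a description of how the sculptures themselves were designed.

```python
import math

PHI = (1 + math.sqrt(5)) / 2             # golden ratio, about 1.618
GOLDEN_ANGLE_DEG = 360 * (1 - 1 / PHI)   # about 137.5 degrees

def phyllotaxis_points(n, scale=1.0):
    """Return n (x, y) points, each rotated one golden angle from the previous.

    The sqrt(k) radius is an assumed spacing rule (Vogel's model) that keeps
    the points evenly packed on a flat disc.
    """
    points = []
    for k in range(n):
        theta = math.radians(k * GOLDEN_ANGLE_DEG)
        r = scale * math.sqrt(k)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

if __name__ == "__main__":
    print(f"golden angle: {GOLDEN_ANGLE_DEG:.4f} degrees")
    for x, y in phyllotaxis_points(5):
        print(f"{x:8.3f} {y:8.3f}")
```

Plotting a few hundred of these points should make the familiar families of interlocking spirals visible.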

More Posts from Jupyterjones and Others

gotta catch em all

The Sun Just Released the Most Powerful Flare of this Solar Cycle

The Sun released two significant solar flares on Sept. 6, including one that clocked in as the most powerful flare of the current solar cycle.

The solar cycle is the approximately 11-year cycle during which the Sun’s activity waxes and wanes. The current solar cycle began in December 2008 and is now decreasing in intensity and heading toward solar minimum, expected in 2019-2020. Solar minimum is a phase when solar eruptions are increasingly rare, but history has shown that they can nonetheless be intense.

Footage of the Sept. 6 X2.2 and X9.3 solar flares captured by the Solar Dynamics Observatory in extreme ultraviolet light (131 angstrom wavelength)

Our Solar Dynamics Observatory satellite, which watches the Sun constantly, captured images of both X-class flares on Sept. 6.

Solar flares are classified according to their strength. X-class denotes the most intense flares, followed by M-class, while the smallest flares are labeled as A-class (near background levels) with two more levels in between. Similar to the Richter scale for earthquakes, each of the five levels of letters represents a 10-fold increase in energy output.
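As a rough illustration of the letter scale, the sketch below converts a flare label such as "X9.3" into a peak X-ray flux and compares two flares. The base values in `CLASS_BASE_FLUX` are the commonly cited thresholds in watts per square meter and are not taken from this post. The function names are hypothetical.

```python
# Commonly cited base flux for each class, in W/m^2 (assumed, not from the post).
CLASS_BASE_FLUX = {"A": 1e-8, "B": 1e-7, "C": 1e-6, "M": 1e-5, "X": 1e-4}

def flare_flux(label):
    """Convert a label like 'X9.3' to an approximate peak flux in W/m^2."""
    letter = label[0].upper()
    multiplier = float(label[1:])
    return CLASS_BASE_FLUX[letter] * multiplier

def flux_ratio(label_a, label_b):
    """How many times stronger flare A is than flare B."""
    return flare_flux(label_a) / flare_flux(label_b)

if __name__ == "__main__":
    print(flux_ratio("X1.0", "M1.0"))   # one letter step is a factor of ten
    print(flux_ratio("X9.3", "X2.2"))   # the two Sept. 6 flares compared
```

The number after the letter is a linear multiplier within the class, so an X2 flare is twice as strong as an X1.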

The first flare peaked at 5:10 a.m. EDT, while the second, larger flare, peaked at 8:02 a.m. EDT.

Footage of the Sept. 6 X2.2 and X9.3 solar flares captured by the Solar Dynamics Observatory in extreme ultraviolet light (171 angstrom wavelength) with Earth for scale

Solar flares are powerful bursts of radiation. Harmful radiation from a flare cannot pass through Earth’s atmosphere to physically affect humans on the ground. However, when intense enough, flares can disturb the atmosphere in the layer where GPS and communications signals travel.

Both Sept. 6 flares erupted from an active region labeled AR 2673. This area also produced a mid-level solar flare on Sept. 4, 2017. This flare peaked at 4:33 p.m. EDT, and was about a tenth the strength of X-class flares like those measured on Sept. 6.

Footage of the Sept. 4 M5.5 solar flare captured by the Solar Dynamics Observatory in extreme ultraviolet light (131 angstrom wavelength)

This active region continues to produce significant solar flares. There were two flares on the morning of Sept. 7 as well.

For the latest updates and to see how these events may affect Earth, please visit NOAA’s Space Weather Prediction Center at http://spaceweather.gov, the U.S. government’s official source for space weather forecasts, alerts, watches and warnings.

Follow @NASASun on Twitter and NASA Sun Science on Facebook to keep up with all the latest in space weather research.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.

Jupiter’s Great Red Spot

Jupiter’s Great Red Spot (GRS) is an atmospheric storm that has been raging in Jupiter’s southern hemisphere for at least 400 years.

About 100 years ago, the storm spanned more than 40,000 km. It is currently about half that size and seems to be shrinking.

At its present rate of shrinkage, it could become circular by 2040. The GRS rotates counterclockwise (anticyclonic) and makes a full rotation every six Earth days.

It is not known exactly what causes the Great Red Spot’s reddish color. The most popular theory, which is supported by laboratory experiments, holds that the color may be caused by complex organic molecules, red phosphorus, or sulfur compounds.

The GRS is about two to three times larger than Earth. Winds at its oval edges can reach up to 425 mph (680 km/h).

Infrared data has indicated that the Great Red Spot is colder (and thus, higher in altitude) than most of the other clouds on the planet.

Sources: http://www.universetoday.com/15163/jupiters-great-red-spot/ http://www.space.com/23708-jupiter-great-red-spot-longevity.html

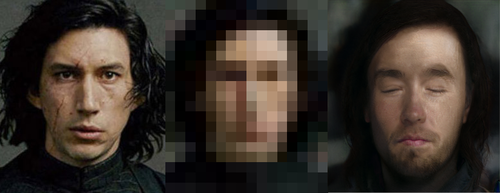

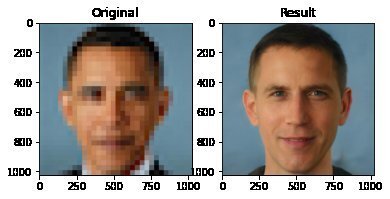

Depixellation? Or hallucination?

There’s an application for neural nets called “photo upsampling” which is designed to turn a very low-resolution photo into a higher-res one.

This is an image from a recent paper demonstrating one of these algorithms, called “PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models”.

It’s the neural net equivalent of shouting “enhance!” at a computer in a movie - the resulting photo is MUCH higher resolution than the original.

Could this be a privacy concern? Could someone use an algorithm like this to identify someone who’s been blurred out? Fortunately, no. The neural net can’t recover detail that doesn’t exist - all it can do is invent detail.

This becomes more obvious when you downscale a photo, give it to the neural net, and compare its upscaled version to the original.

As it turns out, there are lots of different faces that can be downscaled into that single low-res image, and the neural net’s goal is just to find one of them. Here it has found a match - why are you not satisfied?
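To make the "lots of different faces" point concrete, here is a toy sketch in which two clearly different 4x4 grayscale grids shrink to exactly the same 2x2 image. It assumes plain block averaging as the downscaling step. The real pipeline may use a different downscaling operator, and the pixel values are made up.

```python
def downscale(image, factor):
    """Average each factor-by-factor block of a square grayscale image."""
    size = len(image) // factor
    out = []
    for i in range(size):
        row = []
        for j in range(size):
            block = [image[i * factor + di][j * factor + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# Two different made-up "high-res" images.
face_a = [[0, 100, 50, 50],
          [100, 0, 50, 50],
          [10, 20, 200, 200],
          [30, 40, 200, 200]]
face_b = [[50, 50, 0, 100],
          [50, 50, 100, 0],
          [25, 25, 150, 250],
          [25, 25, 250, 150]]

if __name__ == "__main__":
    print(downscale(face_a, 2))
    print(downscale(face_b, 2))
```

Both calls print the same grid, so nothing in the low-res image can tell an upscaler which of the two originals it came from.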

And it’s very sensitive to the exact position of the face, as I found out in this horrifying moment below. I verified that yes, if you downscale the upscaled image on the right, you’ll get something that looks very much like the picture in the center. Stand way back from the screen and blur your eyes (basically, make your own eyes produce a lower-resolution image) and the three images below will look more and more alike. So technically the neural net did an accurate job at its task.

A tighter crop improves the image somewhat. Somewhat.

The neural net reconstructs what it’s been rewarded to see, and since it’s been trained to produce human faces, that’s what it will reconstruct. So if I were to feed it an image of a plush giraffe, for example…

Given a pixellated image of anything, it’ll invent a human face to go with it, like some kind of dystopian computer system that sees a suspect’s image everywhere. (Building an algorithm that upscales low-res images to match faces in a police database would be both a horrifying misuse of this technology and not out of character with how law enforcement currently manipulates photos to generate matches.)

However, speaking of what the neural net’s been rewarded to see - shortly after this particular neural net was released, Twitter user chicken3gg posted this reconstruction:

Others then did experiments of their own, and many of them, including the authors of the original paper on the algorithm, found that the PULSE algorithm had a noticeable tendency to produce white faces, even if the input image hadn’t been of a white person. As James Vincent wrote in The Verge, “It’s a startling image that illustrates the deep-rooted biases of AI research.”

Biased AIs are a well-documented phenomenon. When its task is to copy human behavior, AI will copy everything it sees, not knowing what parts it would be better not to copy. Or it can learn a skewed version of reality from its training data. Or its task might be set up in a way that rewards - or at the least doesn’t penalize - a biased outcome. Or the very existence of the task itself (like predicting “criminality”) might be the product of bias.

In this case, the AI might have been inadvertently rewarded for reconstructing white faces if its training data (Flickr-Faces-HQ) had a large enough skew toward white faces. Or, as the authors of the PULSE paper pointed out (in response to the conversation around bias), the standard benchmark that AI researchers use for comparing their accuracy at upscaling faces is based on the CelebA HQ dataset, which is 90% white. So even if an AI did a terrible job at upscaling other faces, but an excellent job at upscaling white faces, it could still technically qualify as state-of-the-art. This is definitely a problem.
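A back-of-the-envelope sketch of that benchmark problem: if the overall score is just an average weighted by how much of the dataset each group makes up, a 90/10 split lets a large gap hide behind a good-looking headline number. The per-group accuracies below are hypothetical values chosen for illustration, not measurements of PULSE or any other model.

```python
def benchmark_accuracy(group_shares, group_accuracy):
    """Overall accuracy as the share-weighted average of per-group accuracy."""
    return sum(group_shares[g] * group_accuracy[g] for g in group_shares)

shares = {"white": 0.9, "other": 0.1}        # dataset split quoted above
accuracy = {"white": 0.95, "other": 0.20}    # hypothetical per-group accuracy

overall = benchmark_accuracy(shares, accuracy)

if __name__ == "__main__":
    print(f"overall benchmark accuracy: {overall:.3f}")
```

With these made-up numbers the overall score is 0.875, even though the model fails four times out of five on the smaller group.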

A related problem is the huge lack of diversity in the field of artificial intelligence. Even an academic project with art as its main application should not have gone all the way to publication before someone noticed that it was hugely biased. Several factors are contributing to the lack of diversity in AI, including anti-Black bias. The repercussions of this striking example of bias, and of the conversations it has sparked, are still being strongly felt in a field that’s long overdue for a reckoning.

Hey guys, I’m observing a high school class and was looking at a textbook, and learned that irrationals are closed under addition! Super cool, who knew!

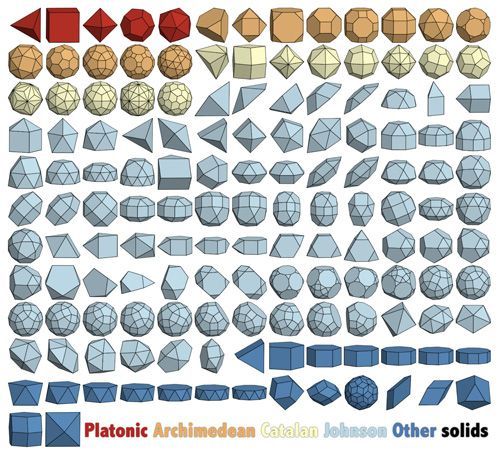

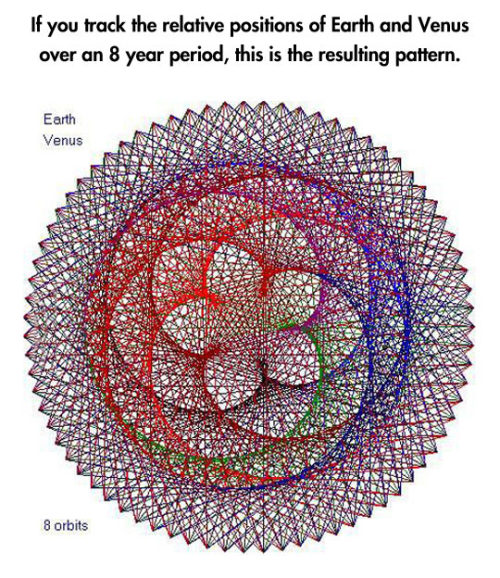

How is geometry entangled in the fabric of the Universe?

Geometry can be seen in action at all scales of the Universe, whether astronomical, in the orbital resonance of the planets for example, or molecular, in how crystals take their perfect structures. But going deeper and deeper into the fabric of the material world, we find that at the quantum scale, geometry is a catalyst for many of the laws of quantum physics and even definitions of reality.

Take for example the research of Duncan Haldane, John Michael Kosterlitz and David Thouless, the winners of the 2016 Nobel Prize in Physics. By using geometry and topology, they understood how exotic forms of matter take shape through the effects of quantum mechanics. Using techniques borrowed from geometry and topology, they studied the changes between states of matter (from plasma to gas, from gas to liquid and from liquid to solid) and derived a set of rules that explain different types of properties and behaviors of matter. Furthermore, by coming across new types of symmetry patterns in quantum states that can influence those behaviors or even create new exotic types of matter, they gave new meaning and new opportunities to the use of geometry as a study of “the real”.

If the Nobel Prize winners used abstract features of geometry to define physical aspects of matter, more tangible properties of geometry can be used to define other less tangible aspects of reality, like time or causality. In relativity, 3D space and 1D time become a single 4D entity called “space-time”, as perceived by a space-time traveler. When an infinite number of travelers are brought into the equation, the numbers add up fast, and to see, for example, how one traveler’s space-time looks to another traveler, we can use geometric diagrams of these equations. By tracing what a traveler “sees” in space-time and assuming that all travelers measure the same speed of light, the points of view of a stationary traveler and a moving one intersect along a hyperbola that represents the locations of space-time events seen by both travelers, regardless of their reference frame. These intersections represent a single value of the space-time interval, showing that space-time depends on the geometry given by the causality hyperbola.
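The claim that both travelers agree on a single space-time interval can be checked numerically. The sketch below assumes one space dimension, units where the speed of light is 1, and the standard Lorentz boost between a stationary and a moving traveler. The function names are illustrative.

```python
import math

def lorentz_boost(t, x, v, c=1.0):
    """Coordinates of event (t, x) as seen by a traveler moving at velocity v."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    t_prime = gamma * (t - v * x / c ** 2)
    x_prime = gamma * (x - v * t)
    return t_prime, x_prime

def interval_squared(t, x, c=1.0):
    """Space-time interval squared, (ct)^2 - x^2."""
    return (c * t) ** 2 - x ** 2

if __name__ == "__main__":
    t, x = 5.0, 3.0
    for v in (0.0, 0.3, 0.6, 0.9):
        tp, xp = lorentz_boost(t, x, v)
        print(f"v={v}: t'={tp:.3f}, x'={xp:.3f}, s^2={interval_squared(tp, xp):.3f}")
```

The coordinates change with v, but every line reports the same interval. All those (t', x') pairs lie on one hyperbola, (ct)² − x² = constant.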

If the abstract influence of geometry on the dimensions of reality isn’t enough, take for example one of the most interesting experiments of quantum physics, the double-slit experiment, where light and matter are shown to behave as both waves and particles. When a stream of photons is sent through two slits toward a wall, the naive expectation is that each photon will travel in a straight line and strike the wall directly behind a slit. Instead, the photons arrange themselves in specific patterns, called diffraction patterns, a behavior that is observable mainly for small particles like electrons, neutrons, atoms and small molecules, because larger objects have wavelengths too short to produce a visible pattern.

Two-slit diffraction pattern produced by a plane wave. Animation by Fu-Kwun Hwang
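The banded pattern can be reproduced with the textbook far-field (Fraunhofer) formula for two identical slits: interference fringes from the slit separation, modulated by a single-slit envelope from the slit width. This is a sketch under that idealized assumption. The wavelength and slit sizes are arbitrary example values.

```python
import math

def double_slit_intensity(theta, wavelength, slit_separation, slit_width):
    """Relative far-field intensity (1.0 at the center) at angle theta in radians."""
    s = math.sin(theta)
    alpha = math.pi * slit_separation * s / wavelength   # two-slit interference phase
    beta = math.pi * slit_width * s / wavelength         # single-slit envelope phase
    envelope = 1.0 if beta == 0 else (math.sin(beta) / beta) ** 2
    return math.cos(alpha) ** 2 * envelope

if __name__ == "__main__":
    wavelength = 500e-9   # example: green light, in meters
    separation = 10e-6    # example slit separation, in meters
    width = 2e-6          # example slit width, in meters
    for milliradians in range(0, 101, 10):
        theta = milliradians / 1000
        i = double_slit_intensity(theta, wavelength, separation, width)
        print(f"{milliradians:4d} mrad  {'#' * round(40 * i)}")
```

The bars rise and fall with angle instead of forming two bright stripes, which is the signature of wave behavior.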

The wave-particle duality is a handful, since it is a theory that has worked well in physics but whose meaning or representation has never been satisfactorily resolved. Perhaps future experiments that involve more abstract roles of geometry in quantum fields, as in the case of the Nobel Prize winners, will provide new answers to how everything functions at these small scales of matter.

In this direction, Garrett Lisi tries to explain how everything works, especially at quantum levels, by uniting all the forces of the Universe, its fibers and particles, into an eight-dimensional geometric structure. He suggests that each dot, or reference, in space-time has a shape, called a fibre, attributed to each type of particle. Thus, a separate layer of space is created, parallel to the one we can perceive, given by these shapes and their interactions. Although the theory received both good reviews and widespread skepticism, its attempt to bring all known fundamental aspects of physics into one possible theory of everything is laudable. And proving that with the laws and theories of geometry would be one step closer to a Universal Geometry theory.

Interesting submission rk1232! Thanks for the heads up! :D :D

Everyone who reblogs this will get a pick-me-up in their ask box.

Every. Single. One. Of. You.
