Jupyterjones - Productivity Please !!!

More Posts from Jupyterjones and Others

Depixellation? Or hallucination?

There’s an application for neural nets called “photo upsampling” which is designed to turn a very low-resolution photo into a higher-res one.

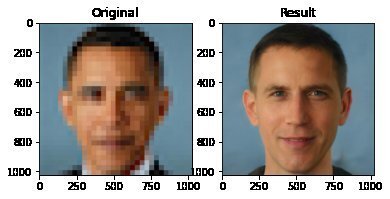

This is an image from a recent paper demonstrating one of these algorithms, called “PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models.”

It’s the neural net equivalent of shouting “enhance!” at a computer in a movie - the resulting photo is MUCH higher resolution than the original.

Could this be a privacy concern? Could someone use an algorithm like this to identify someone who’s been blurred out? Fortunately, no. The neural net can’t recover detail that doesn’t exist - all it can do is invent detail.

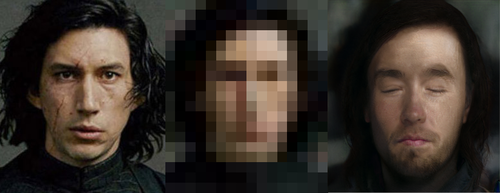

This becomes more obvious when you downscale a photo, give it to the neural net, and compare its upscaled version to the original.

As it turns out, there are lots of different faces that can be downscaled into that single low-res image, and the neural net’s goal is just to find one of them. Here it has found a match - why are you not satisfied?
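To see why many faces can collapse into a single low-res image, here is a toy sketch in Python. It assumes simple average pooling as the downscaling step (PULSE's own downscaling operator may differ), and the two tiny 4x4 "faces" are made-up grayscale grids, not real data:

```python
def downscale(image, factor):
    """Average-pool a square grayscale image (a list of rows) by `factor`."""
    size = len(image) // factor
    return [
        [
            sum(
                image[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ) / factor**2
            for c in range(size)
        ]
        for r in range(size)
    ]


# Two hypothetical 4x4 "faces" with clearly different fine detail.
face_a = [
    [10, 30, 50, 50],
    [30, 10, 50, 50],
    [90, 90, 0, 200],
    [90, 90, 200, 0],
]
face_b = [
    [20, 20, 100, 0],
    [20, 20, 0, 100],
    [80, 100, 100, 100],
    [100, 80, 100, 100],
]

print(face_a == face_b)                              # False
print(downscale(face_a, 2))                          # [[20.0, 50.0], [90.0, 100.0]]
print(downscale(face_a, 2) == downscale(face_b, 2))  # True
```

Going the other way, from the 2x2 grid back up to 4x4, has no unique answer, so an upscaler can only pick one of the many candidates.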

And it’s very sensitive to the exact position of the face, as I found out in this horrifying moment below. I verified that yes, if you downscale the upscaled image on the right, you’ll get something that looks very much like the picture in the center. Stand way back from the screen and blur your eyes (basically, make your own eyes produce a lower-resolution image) and the three images below will look more and more alike. So technically the neural net did an accurate job at its task.
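The "technically accurate" part can be made concrete with a hypothetical toy upscaler. This is not PULSE's actual method (PULSE searches the latent space of a face-generating model); it just tiles a fixed, made-up detail pattern, standing in for whatever the model expects to see, and shifts each block so its average matches the low-res pixel. Average pooling is again assumed as the downscaling step, and `upscale_with_prior` and `consistency_error` are illustrative names:

```python
def downscale(image, factor):
    """Average-pool a square grayscale image (a list of rows) by `factor`."""
    size = len(image) // factor
    return [
        [
            sum(image[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / factor**2
            for c in range(size)
        ]
        for r in range(size)
    ]


def upscale_with_prior(low, prior):
    """Hypothetical toy upscaler: paste the `prior` detail block into every
    low-res pixel, shifted so each block's mean equals that pixel's value."""
    factor = len(prior)
    prior_mean = sum(map(sum, prior)) / factor**2
    size = len(low) * factor
    high = [[0.0] * size for _ in range(size)]
    for r, row in enumerate(low):
        for c, value in enumerate(row):
            for i in range(factor):
                for j in range(factor):
                    high[r * factor + i][c * factor + j] = value + prior[i][j] - prior_mean
    return high


def consistency_error(candidate, low, factor):
    """Mean squared difference between the downscaled candidate and the input."""
    small = downscale(candidate, factor)
    diffs = [(a - b) ** 2 for ra, rb in zip(small, low) for a, b in zip(ra, rb)]
    return sum(diffs) / len(diffs)


low_res = [[20, 50], [90, 100]]
prior = [[0, 40], [40, 0]]  # made-up "texture" this toy model always adds

guess = upscale_with_prior(low_res, prior)
print(guess[0])                              # [0.0, 40.0, 30.0, 70.0]
print(consistency_error(guess, low_res, 2))  # 0.0
```

The guess passes the downscaling check perfectly, yet every bit of its fine detail came from the prior rather than the input. (A real image would also need clipping to the valid pixel range, which this sketch skips.)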

A tighter crop improves the image somewhat. Somewhat.

The neural net reconstructs what it’s been rewarded to see, and since it’s been trained to produce human faces, that’s what it will reconstruct. So if I were to feed it an image of a plush giraffe, for example…

Given a pixellated image of anything, it’ll invent a human face to go with it, like some kind of dystopian computer system that sees a suspect’s image everywhere. (Building an algorithm that upscales low-res images to match faces in a police database would be both a horrifying misuse of this technology and not out of character with how law enforcement currently manipulates photos to generate matches.)

However, speaking of what the neural net’s been rewarded to see - shortly after this particular neural net was released, Twitter user chicken3gg posted this reconstruction:

Others then did experiments of their own, and many of them, including the authors of the original paper on the algorithm, found that the PULSE algorithm had a noticeable tendency to produce white faces, even if the input image hadn’t been of a white person. As James Vincent wrote in The Verge, “It’s a startling image that illustrates the deep-rooted biases of AI research.”

Biased AIs are a well-documented phenomenon. When its task is to copy human behavior, AI will copy everything it sees, not knowing what parts it would be better not to copy. Or it can learn a skewed version of reality from its training data. Or its task might be set up in a way that rewards - or at least doesn’t penalize - a biased outcome. Or the very existence of the task itself (like predicting “criminality”) might be the product of bias.

In this case, the AI might have been inadvertently rewarded for reconstructing white faces if its training data (Flickr-Faces-HQ) had a large enough skew toward white faces. Or, as the authors of the PULSE paper pointed out (in response to the conversation around bias), the standard benchmark that AI researchers use for comparing their accuracy at upscaling faces is based on the CelebA HQ dataset, which is 90% white. So an AI could do a terrible job at upscaling other faces and an excellent job at upscaling white faces, and still technically qualify as state-of-the-art. This is definitely a problem.
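A quick bit of arithmetic shows how a benchmark that is 90% one group can reward this. The per-group quality scores below are invented for illustration, not measurements of any real model; only the 90% figure comes from the paragraph above, and `benchmark_score` is a hypothetical helper that assumes the benchmark reports a simple share-weighted average:

```python
# Share of the benchmark made up of each group (90% white, per the post).
share = {"white": 0.90, "other": 0.10}

# Hypothetical per-group quality scores (0 = useless, 1 = perfect).
skewed_model = {"white": 0.95, "other": 0.40}
balanced_model = {"white": 0.85, "other": 0.85}


def benchmark_score(per_group):
    """Overall score as a share-weighted average across groups."""
    return sum(share[group] * per_group[group] for group in share)


print(round(benchmark_score(skewed_model), 3))    # 0.895
print(round(benchmark_score(balanced_model), 3))  # 0.85
```

The skewed model wins the headline number while doing less than half as well as the balanced one on everyone outside the majority group.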

A related problem is the huge lack of diversity in the field of artificial intelligence. Even an academic project with art as its main application should not have gone all the way to publication before someone noticed that it was hugely biased. Several factors are contributing to the lack of diversity in AI, including anti-Black bias. The repercussions of this striking example of bias, and of the conversations it has sparked, are still being strongly felt in a field that’s long overdue for a reckoning.


My book on AI is out, and you can now get it in any of these several ways: Amazon, Barnes & Noble, Indiebound, Tattered Cover, Powell’s, Boulder Bookstore.

Geometry and Divinity

Omnipresence

The Point

Divinity is present in every aspect and dimension of natural order and causality. It is part of the material world through various holistic and messianic incarnations, while at the same time being a component of human thought and action. This kind of omnipresence is logically possible through the classical definition of a geometrical point, which is part of the entire space but does not occupy any of it.

Trinity

The Borromean Rings

Distinct, yet considered to be one in all else, the Three Divine Persons are sometimes represented by the Borromean Rings: no two of the three rings are linked with each other, but nonetheless all three are linked.

Divinity

Harmonic Proportion

The harmonic state of an element is associated with beauty, which arises from the equilibrium of its components. Nature is perceived as beautiful, and its creator as good: inevitably and inherently in harmony with its creation.

Co-authored by Ben Tippett* and David Tsang (non-fictional physicists at the fictional Gallifrey Polytechnic Institute and Gallifrey Institute of Technology, respectively), the paper – which you can access free of charge over on arXiv – presents a spacetime geometry that would make retrograde time travel possible. Such a spacetime geometry, write the researchers, would emulate “what a layperson would describe as a time machine.” [x]

What’s encrypting your internet surfing? An algorithm created by a supercomputer? Well, if the site you’re visiting is encrypted by the cyber security firm Cloudflare, your activity may be protected by a wall of lava lamps.

Cloudflare protects websites for Uber, OkCupid, and Fitbit, for instance. At its San Francisco headquarters, a wall of over 100 lava lamps in a variety of colors serves as a source of randomness, and the lamps’ unpredictable patterns help deter hackers from accessing protected information.

As the lava lamps bubble and swirl, a video camera on the ceiling monitors their unpredictable changes and feeds the footage to a computer, which converts that randomness into random values that are extremely hard to predict and can be used to help generate encryption keys.
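The general idea of turning a camera feed into randomness can be sketched in a few lines of Python. This is not Cloudflare's actual code: it assumes a captured frame arrives as raw bytes, condenses it with SHA-256, and mixes in the operating system's own randomness. The helper names `frame_digest` and `mix_seed` are hypothetical:

```python
import hashlib
import os


def frame_digest(frame_bytes: bytes) -> bytes:
    """Condense one (hypothetical) raw camera frame into a 32-byte digest.

    Changing even a single bit of the frame gives an unrelated digest."""
    return hashlib.sha256(frame_bytes).digest()


def mix_seed(frame_bytes: bytes) -> bytes:
    """Combine the frame digest with fresh OS randomness into a 32-byte seed,
    so the result stays unpredictable even to someone who sees the camera feed."""
    return hashlib.sha256(frame_digest(frame_bytes) + os.urandom(32)).digest()


frame_a = bytes(range(256)) * 4  # stand-in for one frame's pixel data
frame_b = bytearray(frame_a)
frame_b[100] ^= 1                # flip one bit: a blob of wax drifted slightly
frame_b = bytes(frame_b)

print(frame_digest(frame_a).hex()[:16])
print(frame_digest(frame_b).hex()[:16])  # differs from the line above
print(len(mix_seed(frame_a)))            # 32
```

Hashing is what makes tiny physical changes (a moving blob, a passer-by, a shift in window light) matter: any difference in the frame produces a completely different output.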

Numbers generated by machines alone follow an algorithm, so they have relatively predictable patterns: if hackers can work out the algorithm and its starting point, they can reproduce the output, which poses a security risk. Lava lamps add the sheer randomness of the physical world to the equation, making the result nearly impossible for hackers to predict.
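Here is why a known algorithm plus a known starting point is a problem. Python's `random` module is a deterministic pseudorandom generator (its documentation warns against using it for security), so two generators given the same seed produce the same "random" numbers; the `secrets` module instead draws on the operating system's secure randomness source. The seed value 1234 is an arbitrary stand-in for one an attacker has somehow learned:

```python
import random
import secrets

# Two generators seeded identically: the "victim" and an attacker who
# has learned (or guessed) the seed. 1234 is an arbitrary example.
victim = random.Random(1234)
attacker = random.Random(1234)

victim_key = bytes(victim.randrange(256) for _ in range(16))
guessed_key = bytes(attacker.randrange(256) for _ in range(16))
print(victim_key == guessed_key)  # True: the whole "key" is reproducible

# secrets uses the OS's cryptographically secure source; there is no seed to steal.
safe_key = secrets.token_bytes(16)
print(len(safe_key))              # 16
```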

You might think that this would be kept secret, but it’s not. Simply go in and ask to see the lava lamp display. Visitors are allowed to affect the video footage: human movement, static, and changes in lighting from the windows all work together to make the random output even harder to predict.

So, by standing in front of the display, you add another variable to the mix, making it even harder to hack. Isn’t that interesting?

via atlasobscura.com

Permutations

Figuring out how to arrange things is pretty important.

Like, if we have the letters {A,B,C}, the six ways to arrange them are: ABC, ACB, BAC, BCA, CAB, CBA
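Python's standard library can list these for us; `itertools.permutations` happens to produce them in the same order as above:

```python
from itertools import permutations
from math import factorial

arrangements = ["".join(p) for p in permutations("ABC")]
print(arrangements)       # ['ABC', 'ACB', 'BAC', 'BCA', 'CAB', 'CBA']
print(len(arrangements))  # 6
print(factorial(3))       # 6, since there are 3 * 2 * 1 ways to fill the three slots
```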

And we can say more interesting things about them (e.g. combinatorics). Another great extension is when we make them dynamic.

Like, if we go from ABC to ACB, and back…

We can abstract away from needing to use individual letters, and say these are both “switching the 2nd and 3rd elements,” and it is the same thing both times.

Each of these switches can be more complicated than that: going from ABCDE to EDACB is really just 1->3->4->2->5->1 (the item in position 1 moves to position 3, the one in position 3 moves to 4, and so on), and if we do it 5 times we cycle back to the start.
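Here is a small Python sketch of that cycle, reading 1->3 as "the item in position 1 moves to position 3". The helpers `cycle_to_map`, `apply`, and `order` are illustrative names, not from any library:

```python
def cycle_to_map(cycle, n):
    """Turn a cycle like (1, 3, 4, 2, 5) into {position: new position}."""
    mapping = {p: p for p in range(1, n + 1)}  # positions outside the cycle stay put
    for src, dst in zip(cycle, cycle[1:] + cycle[:1]):
        mapping[src] = dst
    return mapping


def apply(mapping, s):
    """Move the item at each 1-indexed position p to position mapping[p]."""
    out = [None] * len(s)
    for old, new in mapping.items():
        out[new - 1] = s[old - 1]
    return "".join(out)


def order(mapping, s):
    """How many applications it takes to get back to s (s must have distinct items)."""
    count, current = 1, apply(mapping, s)
    while current != s:
        current, count = apply(mapping, current), count + 1
    return count


five_cycle = cycle_to_map((1, 3, 4, 2, 5), 5)
print(apply(five_cycle, "ABCDE"))  # EDACB
print(order(five_cycle, "ABCDE"))  # 5
```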

We can also have two switches happening at once, like 1->2->3->1 and 4->5->4, and this takes 6 repetitions to get back to the start, since 6 is the smallest number that is a multiple of both cycle lengths, 3 and 2.
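The same brute-force check works for two cycles at once. The position map below encodes 1->2->3->1 and 4->5->4 together (reading 1->2 as "position 1 moves to position 2"), and `math.lcm` (Python 3.9+) gives the shortcut: the cycles of length 3 and 2 only line up again after lcm(3, 2) = 6 steps. The `apply` helper is an illustrative name:

```python
from math import lcm


def apply(mapping, s):
    """Move the item at each 1-indexed position p to position mapping[p]."""
    out = [None] * len(s)
    for old, new in mapping.items():
        out[new - 1] = s[old - 1]
    return "".join(out)


# (1 2 3) and (4 5) happening at once, written as one position map.
both = {1: 2, 2: 3, 3: 1, 4: 5, 5: 4}

state, steps = apply(both, "ABCDE"), 1
while state != "ABCDE":
    state, steps = apply(both, state), steps + 1

print(steps)       # 6
print(lcm(3, 2))   # 6: both cycles must "come around" at the same time
```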

Then, let’s extend this a bit further.

First, let’s get a better notation, and use (1 2 3) for what I called 1->2->3->1 before.

Let’s show how we can turn these permutations into a group.

Then, let’s say the identity is just keeping things the same, and call it id.

And this repeating idea can be extended into the group's combining operation: doing one permutation and then the other. For various historical reasons (it matches how function composition is written), the combination of permutation A and then permutation B is written B·A.
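A quick sketch shows the "A then B is B·A" convention in action, and why the order matters. Permutations are position maps here (an assumption carried over from the 1->3 reading above), A is the switch of the 2nd and 3rd elements, and B is (1 2 3); `compose` and `apply` are illustrative helpers:

```python
def apply(mapping, s):
    """Move the item at each 1-indexed position p to position mapping[p]."""
    out = [None] * len(s)
    for old, new in mapping.items():
        out[new - 1] = s[old - 1]
    return "".join(out)


def compose(b, a):
    """B·A: do permutation a first, then b (so position p goes to b[a[p]])."""
    return {p: b[a[p]] for p in a}


A = {1: 1, 2: 3, 3: 2}  # switch the 2nd and 3rd elements
B = {1: 2, 2: 3, 3: 1}  # the cycle (1 2 3)
identity = {1: 1, 2: 2, 3: 3}

print(apply(B, apply(A, "ABC")))    # BAC: A first, then B
print(apply(compose(B, A), "ABC"))  # BAC: the same thing, as a single permutation
print(apply(compose(A, B), "ABC"))  # CBA: the other order gives something else
print(compose(A, A) == identity)    # True: switching twice undoes itself
```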

This is closed, because permuting all the things and then permuting them again still leaves exactly one of each element, just in some order, so the result is itself a permutation.

Inverses exist, because you just need to put everything from the new position into the old position to reverse it.

Associativity will be left as an exercise to the reader (read: I don’t want to prove it)
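Proving associativity in general is still left to the reader, but for three elements we can at least check every group property by brute force. This sketch only covers the 6 permutations of three things, so it is a sanity check rather than a proof for all sizes. Here a permutation is a tuple whose entry at index i says where position i goes (0-indexed, unlike the 1-indexed text), and `compose` and `inverse` are illustrative helpers:

```python
from itertools import permutations, product

N = 3
elements = list(permutations(range(N)))  # all 6 permutations of positions 0..2
identity = tuple(range(N))


def compose(b, a):
    """b·a, i.e. "a, then b": position i goes to a[i], then to b[a[i]]."""
    return tuple(b[a[i]] for i in range(N))


def inverse(a):
    """Send everything from its new position back to its old one."""
    inv = [0] * N
    for i, target in enumerate(a):
        inv[target] = i
    return tuple(inv)


closed = all(compose(b, a) in elements for a, b in product(elements, repeat=2))
has_identity = all(compose(identity, a) == a == compose(a, identity) for a in elements)
has_inverses = all(compose(inverse(a), a) == identity for a in elements)
associative = all(
    compose(c, compose(b, a)) == compose(compose(c, b), a)
    for a, b, c in product(elements, repeat=3)
)
print(closed, has_identity, has_inverses, associative)  # True True True True
```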

We Need Your Help to Find STEVE

Glowing in mostly purple and green colors, a newly discovered celestial phenomenon is sparking the interest of scientists, photographers and astronauts. The display was initially discovered by a group of citizen scientists who took pictures of the unusual lights and playfully named them “Steve.”

When scientists got involved and learned more about these purples and greens, they wanted to keep the name as an homage to the citizen scientists who discovered and first named it. Now it is STEVE, short for Strong Thermal Emission Velocity Enhancement.

Credit: ©Megan Hoffman

STEVE occurs closer to the equator than where most auroras appear – for example, Southern Canada – in areas known as the sub-auroral zone. Because auroral activity in this zone is not well researched, studying STEVE will help scientists learn about the chemical and physical processes going on there. This helps us paint a better picture of how Earth’s magnetic field functions and interacts with charged particles in space. Ultimately, scientists can use this information to better understand the space weather near Earth, which can interfere with satellites and communications signals.

Want to become a citizen scientist and help us learn more about STEVE? You can submit your photos to a citizen science project called Aurorasaurus, funded by NASA and the National Science Foundation. Aurorasaurus tracks appearances of auroras – and now STEVE – around the world through reports and photographs submitted via a mobile app and on aurorasaurus.org.

Here are six tips from what we have learned so far to help you spot STEVE:

1. STEVE is a very narrow arc, aligned East-West, and extends for hundreds or thousands of miles.

Credit: ©Megan Hoffman

2. STEVE mostly emits light in purple hues. Sometimes the phenomenon is accompanied by a short-lived, rapidly evolving green picket fence structure (example below).

Credit: ©Megan Hoffman

3. STEVE can last 20 minutes to an hour.

4. STEVE appears closer to the equator than where normal – often green – auroras appear. It appears approximately 5-10° further south in the Northern Hemisphere. This means it could appear overhead at latitudes similar to Calgary, Canada. The phenomenon has been reported from the United Kingdom, Canada, Alaska, northern US states, and New Zealand.

5. STEVE has only been spotted so far in the presence of an aurora (but auroras often occur without STEVE). Scientists are investigating to learn more about how the two phenomena are connected.

6. STEVE may only appear in certain seasons. It was not observed from October 2016 to February 2017. It also was not seen from October 2017 to February 2018.

Credit: ©Megan Hoffman

STEVE (and aurora) sightings can be reported at www.aurorasaurus.org or with the Aurorasaurus free mobile apps on Android and iOS. Anyone can sign up, receive alerts, and submit reports for free.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.
